Deep Dive: Creating and fine-tuning the save system of Shadow Gambit

Here are five key takeaways from the technical process behind this programming challenge.

Game Developer Deep Dives are an ongoing series with the goal of shedding light on specific design, art, or technical features within a video game in order to show how seemingly simple, fundamental design decisions aren’t really that simple at all.

Earlier installments cover topics such as how indie developer Mike Sennott cultivated random elements in the branching narrative of Astronaut: The Best, how the developers of Meet Your Maker avoided crunch by adopting smart production practices, and how the team behind Dead Cells turned the game into a franchise by embracing people-first values.

In this edition, we learn how Mimimi Games created their save-scum-friendly save system in Shadow Gambit: The Cursed Crew with senior developer Philipp Wittershagen.

Hi, I'm Philipp Wittershagen, senior developer at the small, independent German game studio Mimimi Games. We're best known for reviving the stealth-strategy genre with Shadow Tactics: Blades of the Shogun to critical acclaim, following up that success with Desperados III and, most recently, our most ambitious and self-published title Shadow Gambit: The Cursed Crew, which, although again released to critical acclaim, will sadly be our final game.

The groundwork for the later titles was laid by a very small development department of four people at the time of creating Shadow Tactics. Though many things were optimized later on, the architecture of the save system was defined by then and was therefore influenced by the small team size and a requirement to be ultra-efficient.

You might think, "Most games have a save system. What's so special about yours?" Let me give you a short introduction to the stealth-strategy genre and what special demands it places on a save system, after which I'll describe how our system works, how we tackled the requirements, and how we optimized it all for production.

The stealth-strategy genre

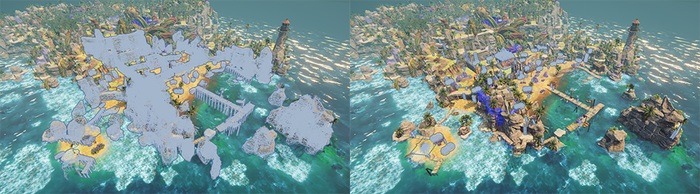

Image provided by publisher.

The stealth-strategy genre, and especially the productions by Mimimi Games, feature a top-down perspective over maps of around 200x200m. This might not sound like much; however, they are densely packed with enemies and interactions. While the levels of our previous games were filled with at most 100 NPCs, Shadow Gambit, with its more open structure, sometimes has up to 250 NPCs on a single map. All these NPCs have their own detection, routines, and AI-behavior running, which the player can scroll over and analyze at all times. Add to this up to eight (though in most cases it's three) player-controlled characters with skills that can be planned and executed simultaneously, as well as various scripted events and interactions with the map that again can have all kinds of complicated logics and freely trigger cutscenes, animations or quest-updates.

Stealth games have always been prone to save scumming, though with multiple player characters and the diverse skill system, our games actively embrace this, encouraging players to try out different approaches and save and load often.


All this sets certain requirements for our save system:

Reliably save everything at every moment in time–be it the current behavior of every single NPC on the map, interactions with usable objects, skills in execution and animation states of characters, or even scripted map events created by level design.

Save fast–as saving is most likely triggered often.

Load even faster.

Maintain reliability during development, as level design will continuously need it for testing.

Don't generate too much overhead for the development department, as the small team still has to be ultra-efficient to ship the ambitious projects.

One preliminary decision

For the harbor area, all dynamic objects in the save-roots are visualized on the right (vs static objects on the left). These are saved with all their components and fields and are destroyed and fully reconstructed on every load. Images provided by publisher.

To allow the team to move fast and efficiently, and to reliably save all dynamic information, one basic decision was made early in development: we would define certain root objects in the scene (so-called "save-roots"), each of which would introduce all of its sub-objects to the save system together with all their components. All these objects, including every field referenced by them, are saved by default unless explicitly marked not to be saved.
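To make the "save everything unless marked otherwise" rule concrete, here is a minimal sketch in plain C#. It is not Mimimi's code: the `DontSaveAttribute` marker, the `SaveFieldScanner` helper and the `Guard` classes are hypothetical names chosen for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical opt-out marker; the article only states that fields can be
// "explicitly marked not to be saved".
[AttributeUsage(AttributeTargets.Field)]
public sealed class DontSaveAttribute : Attribute { }

public static class SaveFieldScanner
{
    // Returns every instance field (public and non-public) declared anywhere in
    // the type hierarchy, except those carrying [DontSave].
    public static List<FieldInfo> GetSavedFields(Type type)
    {
        const BindingFlags flags = BindingFlags.Instance | BindingFlags.Public |
                                   BindingFlags.NonPublic | BindingFlags.DeclaredOnly;
        var result = new List<FieldInfo>();
        // Walk base classes explicitly: private fields of a base type are not
        // returned when reflecting over a derived type.
        for (Type t = type; t != null && t != typeof(object); t = t.BaseType)
        {
            foreach (FieldInfo field in t.GetFields(flags))
            {
                if (field.IsDefined(typeof(DontSaveAttribute), false)) continue;
                result.Add(field);
            }
        }
        return result;
    }
}

// Example components: nothing is needed to opt in, only to opt out.
public class GuardBase
{
    private float m_alertness = 0.5f;
}

public class Guard : GuardBase
{
    public string Name = "Guard A";
    private int m_patrolIndex = 3;
    [DontSave] private object m_debugCache = new object();
}
```

Calling `SaveFieldScanner.GetSavedFields(typeof(Guard))` yields `Name`, `m_patrolIndex` and the inherited `m_alertness`, while `m_debugCache` is skipped.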

This resulted in having our developers write code in a specific, though still efficient way (some built-in C# classes like HashSet or Func are not supported), but at the same time led to a very remarkable result:

If the code uses features that somehow cannot be saved, it would most likely immediately break the game after the first attempt to save-load.

If the code doesn't use unsupported features, the save-load will succeed with the hard guarantee that every saved object with every saved field could be generated again by the load process.

As it wasn't exactly clear in the beginning which features were not supported, over the course of a few months, every programmer would have a tool running that would warn the user if save-load had not been tested for more than a few minutes. This ensured that all developers were automatically learning which language features they should refrain from using.

Going in-depth

The creation of a correct save-load system that allows for all this custom code to automatically be saved and loaded is no trivial task, so let's see how we approached it and what decisions we made along the way. While I try to describe the system in an engine-independent manner, this will not always be possible. Please just keep in mind that at Mimimi Games, we use the Unity3D-Engine and C# as our programming language. While some concepts like reflection can also be introduced to C++-Engines by means of magic macros, concepts like value- and reference types just don't exist in some other languages.

Saving

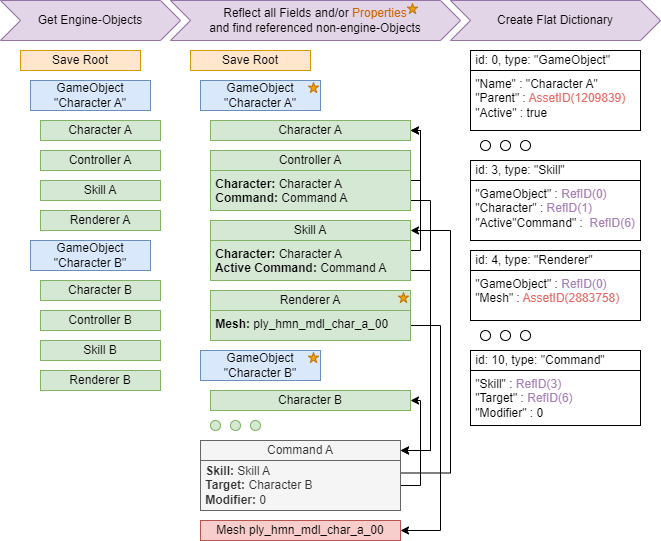

We start by gathering all the GameObjects and Components that are children of our defined save-root objects. These are then reflected for either their properties or their fields. Some objects that are found in this process can also have super-custom serialization methods.

Image provided by publisher.

Depending on the type of an object, one of three distinct serialization methods is used:

Reflection for Properties: Mostly predefined in-engine objects or components of third-party packages where public properties often define an API and are sufficient to recreate the objects.

Reflection for Fields (also non-public): Mostly our custom-written components, where we don't strive for an API that covers all internal processes, but at the same time want to preserve all the internal structure.

Custom Serialization Method: Coroutine-Objects (coroutines at Mimimi may never be started without a reference-object as otherwise, the save-system isn't aware of them), Delegates, and Materials.

As we also have to save objects that are not registered in the engine, we recursively add new objects we find inside these fields and properties to the reflection process. In doing so, we want to ignore objects we can define as "Assets" (e.g., Meshes, Graphics or mere Setting Containers). Our engine sadly does not provide any way during runtime (though it does during development) to distinguish asset objects from others, so we had to introduce another step that runs before the game is built. With the help of reflection, similar to the save process, we recursively gather all the asset objects that are used in a scene, save them to a huge dictionary inside the scene and assign them unique IDs. To simplify the loading process a little, we also add all save-roots to this dictionary, as they should be handled similarly to assets.

For all non-asset objects, we create small, simple data structures by replacing all references with either so-called RefIDs (IDs to other objects in the save file) or AssetIDs (IDs to assets). These are also known as Passive Data Structures (PDS) or Plain Old Data (POD), as they don't have any object-oriented features anymore. This simple data structure can then easily be serialized in various formats. To ensure compatibility with all our target platforms and the possibility for optimizations, we decided to write our own simple serializer that comes in a text as well as a binary version.
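The flattening step can be sketched roughly as follows. This is an illustrative simplification under several assumptions, not the shipped implementation: `PodRecord`, `RefId`, `AssetId`, `GraphFlattener` and the example `Npc` class are invented names, only fields are reflected, and structs, collections and the custom serialization paths described above are left out.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;
using System.Runtime.CompilerServices;

public readonly struct RefId { public readonly int Value; public RefId(int v) { Value = v; } }
public readonly struct AssetId { public readonly int Value; public AssetId(int v) { Value = v; } }

// One flattened object: its type name plus field values in which every
// reference has been replaced by a RefId or an AssetId.
public sealed class PodRecord
{
    public string TypeName = "";
    public Dictionary<string, object> Fields = new Dictionary<string, object>();
}

public sealed class GraphFlattener
{
    // Compares objects by identity so that overridden Equals cannot merge them.
    sealed class ByReference : IEqualityComparer<object>
    {
        bool IEqualityComparer<object>.Equals(object a, object b) => ReferenceEquals(a, b);
        int IEqualityComparer<object>.GetHashCode(object o) => RuntimeHelpers.GetHashCode(o);
    }

    readonly Dictionary<object, int> m_refIds = new Dictionary<object, int>(new ByReference());
    readonly Dictionary<object, int> m_assetIds;   // assumed output of the pre-build step
    public readonly List<PodRecord> Records = new List<PodRecord>();

    public GraphFlattener(Dictionary<object, int> assetIds) { m_assetIds = assetIds; }

    // Returns the RefID of obj, flattening it and everything it references on first visit.
    public int Add(object obj)
    {
        if (m_refIds.TryGetValue(obj, out int id)) return id;
        id = Records.Count;
        m_refIds[obj] = id;   // register before recursing so cyclic references terminate
        var record = new PodRecord { TypeName = obj.GetType().FullName };
        Records.Add(record);

        const BindingFlags flags = BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic;
        foreach (FieldInfo field in obj.GetType().GetFields(flags))
        {
            object value = field.GetValue(obj);
            if (value == null || value is string || value.GetType().IsPrimitive)
                record.Fields[field.Name] = value;                     // plain data stays as-is
            else if (m_assetIds.TryGetValue(value, out int assetId))
                record.Fields[field.Name] = new AssetId(assetId);      // assets are referenced, never copied
            else
                record.Fields[field.Name] = new RefId(Add(value));     // recurse into newly found objects
        }
        return id;
    }
}

// Example types for trying the sketch out.
public class MeshAsset { }
public class Npc
{
    public string Name;
    public int Health;
    public Npc Target;
    public MeshAsset Model;
}
```

Two `Npc` instances that target each other and share one `MeshAsset` end up as two records: each `Target` field holds the other's `RefId`, and both `Model` fields hold the same `AssetId`.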

Coroutines, Delegates and Materials were a continuous challenge (we will see one instance later in the Patching section), though by also being able to save coroutines, we could easily save all kinds of asynchronous code. If all our developers had to introduce state variables every time they were waiting for some external property to change or just had to wait for timed delays, I'm sure we would never have been able to release these games in the state we did.

Loading

In the load process, we first clean up all our save-roots and recreate all objects from the Passive Data Structure. Afterwards, all saved properties and fields are set via reflection, analogous to the serialization process. Custom deserialization methods are used where needed.


Obviously, it's not all that simple, as not all built-in functions of the game engine are bound to GameObjects or can be restored that easily. I'll just list various special cases here, which all needed additional code either during saving, loading or both:

Coroutines: They have a custom serialization method and are started from their current enumerator position after loading.

Time: We want the time to continue at the moment of the save, so we added a singleton component wrapping the internal time methods.

Random: We restore the seed after loads.

Materials: We have to magically map material instances to their asset sources, which ended up requiring by-name mapping.

Internal awake-state of engine objects: Think of how some methods are automatically called after an object is enabled the first time (e.g., Awake or Start). These should not trigger after loading the game, or at least should not impact our code.

Physics: Similar to the awake state, physics objects also have some internal state. We have to ignore trigger and collision callbacks that fire immediately after the load process.

Static fields: We include them in all objects we find as if they were just normal fields (we previously assumed not having to save them at all, but quickly realized this would cause instability and memory leaks). This theoretically leads to them being saved and loaded multiple times, though we discourage using statics anyway, except in our basic singleton classes, which exist only once.

Structs: I silently ignored them in the samples I provided earlier to keep it simple. They share some code with the save process of custom reference types, but are specially handled in the load process, where they're deserialized before other objects.
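As an example of one of these special cases, the time wrapper could work along the following lines. This is only a guess at the idea, assuming gameplay code always asks a wrapper for the time instead of the engine; `GameClock` and its members are hypothetical names.

```csharp
using System;

// Hypothetical stand-in for the singleton time wrapper mentioned above.
// Gameplay code reads Now instead of the engine clock, so a load can rewind it.
public sealed class GameClock
{
    readonly Func<double> m_engineTime;   // e.g. seconds since the application started
    double m_offset;                      // difference between engine time and game time

    public GameClock(Func<double> engineTime) { m_engineTime = engineTime; }

    // Time as seen by gameplay code; continues seamlessly across save-load.
    public double Now => m_engineTime() - m_offset;

    // Value that would be written into the save file.
    public double Save() => Now;

    // After loading, shift the offset so that Now equals the saved time again.
    public void Load(double savedTime) { m_offset = m_engineTime() - savedTime; }
}
```

Timers that store absolute timestamps taken from `Now` then stay valid after a load, because the clock resumes exactly where the save was made.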

Optimizations, optimizations, optimizations

I think after all these technical descriptions, now's a great moment to reevaluate the requirements for the save system we stated in the genre introduction:

(Done) Reliably save everything at every moment in time: We spotted all the special cases we need to handle and were able to build a solid, stable save-load-system.

Save fast–as saving is most likely triggered often.

Load even faster.

(Done) Maintain reliability during development: By setting the default to save everything, we detect errors fast; level-design can use the save-system also during development, with errors being a rare sight.

(Done) Don't generate too much overhead for the development department: Our developers first had to learn how code has to be written for the save-system to work (which classes are supported and which are not), but after a short induction period, the save-system does not impose any overhead to the day-to-day work.

Even before reading this list and noticing the missing "(Done)" tags in certain lines, the experienced C#-developer might have felt increasing doubts about the performance of such a system with every instance of the word "reflection" used in this article. While we were surprised by its speed, reflection is not especially famous for it. And although the current state was okay-ish for development, it was outright disastrous for production. So began the tedious but necessary task of optimizing every inch possible.

Searching for the culprit

The first step in every optimization attempt should always be to profile and find the worst contributor. In addition to the obvious culprit, "reflection," we spotted a few other candidates:

The text serialization and the file size to be saved to and loaded from disk: We switched to a binary serialization format and additionally compressed the save files with GZip.

C# type deserialization: We now save type names as IDs and cache their C#-object on first occurrence. This leads to the first save-load per session being slightly slower in favor of a speedup for the following ones.

Regeneration of in-engine-objects

The first two issues were quite easily solved, which is why I quickly described our solutions and won't discuss them further. Let's now look into the (also quite obvious) optimization we did for the performance of reflection.
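For readers who want to picture those two quick fixes, the sketch below shows one plausible shape for them using only standard .NET APIs (`GZipStream`, `Type.GetType`). The `TypeTable` and `SaveFileCompression` classes are assumptions made for illustration; the article does not describe the actual file layout.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.IO.Compression;

// Maps type names to small integer IDs when saving and caches the resolved
// Type per ID when loading, so the slow name lookup runs once per type.
public sealed class TypeTable
{
    readonly List<string> m_names = new List<string>();
    readonly Dictionary<string, int> m_ids = new Dictionary<string, int>();
    readonly Dictionary<int, Type> m_resolved = new Dictionary<int, Type>();
    public int ResolveCount;   // only here to make the caching observable

    public int GetId(Type type)
    {
        string name = type.AssemblyQualifiedName;
        if (!m_ids.TryGetValue(name, out int id))
        {
            id = m_names.Count;
            m_names.Add(name);
            m_ids[name] = id;
        }
        return id;
    }

    public Type Resolve(int id)
    {
        if (!m_resolved.TryGetValue(id, out Type type))
        {
            type = Type.GetType(m_names[id], throwOnError: true);   // slow path, first occurrence only
            m_resolved[id] = type;
            ResolveCount++;
        }
        return type;
    }
}

public static class SaveFileCompression
{
    public static byte[] Compress(byte[] raw)
    {
        using (var output = new MemoryStream())
        {
            using (var gzip = new GZipStream(output, CompressionMode.Compress))
                gzip.Write(raw, 0, raw.Length);
            return output.ToArray();   // still valid after the gzip stream has been disposed
        }
    }

    public static byte[] Decompress(byte[] compressed)
    {
        using (var input = new GZipStream(new MemoryStream(compressed), CompressionMode.Decompress))
        using (var output = new MemoryStream())
        {
            input.CopyTo(output);
            return output.ToArray();
        }
    }
}
```

The cache trades a slightly slower first load per session for faster subsequent ones, which matches the behavior described above.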

Optimizing reflection

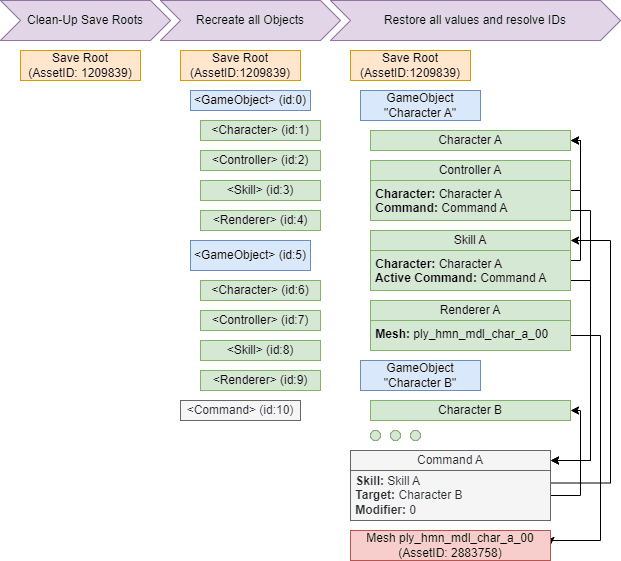

The classic path to building a save-load system in games is to define a common savable base class that exposes some "serialize" and "deserialize" methods and to pass them some kind of database-like object. Inside these methods, all deriving objects can then store or retrieve the data they want to save or load by some IDs. They could look something like this:

Image provided by publisher.

As we used reflection for everything, we didn't have these kinds of methods. To be able to restore the internal state of the in-engine-objects, we did already have a common base class for all savable components, though. Writing these methods by hand would clearly go against our defined goal of "not generating too much overhead", but with a stable system in place and our reflection-based system not requiring any runtime information, we can easily generate these methods in the build process. In addition to generating the methods, we were now able to micro-optimize the type resolution and use pre-calculated hashes of the field names as IDs.
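A generated pair of methods might look roughly like the sketch below. All names (`SaveData`, `FieldHash`, `SavableBehaviour`, `Patrol`) are hypothetical, and the FNV-1a-style hash is just one reasonable choice; the article does not say which hash function was used. A real generator would bake the hash values in as literal constants, whereas this sketch computes them once in static initializers to avoid hand-written numbers.

```csharp
using System;
using System.Collections.Generic;

// Database-like container keyed by hashed field names (hypothetical API).
public sealed class SaveData
{
    readonly Dictionary<uint, object> m_values = new Dictionary<uint, object>();
    public void Write(uint fieldId, object value) { m_values[fieldId] = value; }
    public T Read<T>(uint fieldId) { return (T)m_values[fieldId]; }
}

public static class FieldHash
{
    // 32-bit FNV-1a over the UTF-16 code units of the field name.
    public static uint Of(string name)
    {
        unchecked
        {
            uint hash = 2166136261;
            foreach (char c in name)
            {
                hash ^= c;
                hash *= 16777619;
            }
            return hash;
        }
    }
}

public abstract class SavableBehaviour
{
    public abstract void Serialize(SaveData data);
    public abstract void Deserialize(SaveData data);
}

// Hand-written half of a component: just fields, no save code.
public partial class Patrol : SavableBehaviour
{
    public int WaypointIndex;
    public float WaitTimer;
}

// Half that a build step could emit; no reflection is needed at runtime.
public partial class Patrol
{
    static readonly uint s_waypointIndexId = FieldHash.Of(nameof(WaypointIndex));
    static readonly uint s_waitTimerId = FieldHash.Of(nameof(WaitTimer));

    public override void Serialize(SaveData data)
    {
        data.Write(s_waypointIndexId, WaypointIndex);
        data.Write(s_waitTimerId, WaitTimer);
    }

    public override void Deserialize(SaveData data)
    {
        WaypointIndex = data.Read<int>(s_waypointIndexId);
        WaitTimer = data.Read<float>(s_waitTimerId);
    }
}
```

Splitting the class with `partial` keeps the generated code out of the programmer's source file, which fits the goal of not adding day-to-day overhead.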

Generating the serialize and deserialize methods played a huge role in getting to more user-friendly load times. On the left you see the load-process during development with most optimizations disabled, on the right is the final build. The serialize and deserialize methods themselves gave us a speedup of about 1.5s. Image provided by publisher.

Optimizing the regeneration of in-engine objects

A performance bottleneck we didn't foresee emerged in the regeneration of in-engine objects and components. The methods used for this in Unity3D take disproportionately long. This is probably due to the bridge between C# and the C++ engine that every call has to pass. With this theory in mind, we tried to utilize another method that also yields new objects and components: instantiating prefabs with many components.

The time it takes to create in-engine-objects seems to indeed be pretty much proportional to the number of calls to the engine, so for objects with many components, the instantiate call is way faster. This call can not only create prefabs but also duplicate other objects in the scene. Since we knew what our objects in the scene looked like and what kind of components they contained, we anticipated a solution to the problem at hand. Similar objects in our scenes contain similar components: this is true for many of the up to 250 NPCs; this is true for our player-characters; this is even to some extent true for many of the logics that our level-designers build, as they're made of multiple GameObjects and often contain exactly one command-component per GameObject.

With this knowledge, we sketched up an experiment: If we created template-objects with certain components, we then would only need to duplicate them in case an object with this set of components is loaded. This spares us all the individual calls to add components. Even if most objects only had one component, it would still cut the number of required calls in half, but our NPCs in Shadow Gambit have about 100 components each. The number of calls to the engine and, therefore, also the amount of time we saved here was enormous. Later, we even added pre-generation of the template-copies after the first load. We now already anticipate the next load and, during the normal gameplay, pre-generate multiple copies of the template-objects based on the amount we saw in the player's previous load.
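The effect of the template approach on the number of engine calls can be illustrated with a small stand-alone model. `FakeGameObject` is a stand-in that merely counts simulated engine calls (in Unity, the single duplication call would be the instantiate call mentioned above), and `TemplateCache` is a hypothetical name. The pre-generation of copies during gameplay is left out.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for an engine object; EngineCalls counts simulated native calls.
public sealed class FakeGameObject
{
    public static int EngineCalls;
    public readonly List<string> Components = new List<string>();

    public static FakeGameObject Create() { EngineCalls++; return new FakeGameObject(); }

    public void AddComponent(string type) { EngineCalls++; Components.Add(type); }

    // Duplicating costs one call regardless of how many components exist.
    public FakeGameObject Clone()
    {
        EngineCalls++;
        var copy = new FakeGameObject();
        copy.Components.AddRange(Components);
        return copy;
    }
}

public sealed class TemplateCache
{
    readonly Dictionary<string, FakeGameObject> m_templates = new Dictionary<string, FakeGameObject>();

    // Returns a fresh object carrying exactly the requested components.
    public FakeGameObject Spawn(IReadOnlyList<string> componentTypes)
    {
        string key = string.Join("|", componentTypes);   // component order matters in this sketch
        if (!m_templates.TryGetValue(key, out FakeGameObject template))
        {
            // First request for this component set: build the template the slow way.
            template = FakeGameObject.Create();
            foreach (string type in componentTypes) template.AddComponent(type);
            m_templates[key] = template;
        }
        return template.Clone();
    }
}
```

In this model, spawning ten objects with 100 components each costs 111 simulated calls (one template built with 101 calls, then ten clones) instead of the 1,010 calls needed when every object is assembled component by component.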


All this optimization led to our final save and load times of just a few seconds, even on our largest maps. This would finally comply with our set goals and ensure a smooth player experience.

Patching

At Mimimi, we always strive to release our games as bug-free as possible, but there will always be issues that either slip through QA or turn out to be more severe than we estimated. When patching a game, one doesn't want to break the save-games of current players, as this often leads to players abandoning the game altogether. After failing to ensure save-game compatibility in our first patch for Shadow Tactics, we built tooling to ensure this would not happen again and had to adjust certain parts of our save code for our following projects.

There were two events that most often would lead to our save code being incompatible after patches:

Any change in the list of assets that are required by a level.

Any change in the code that is generated for coroutines.

Change in asset dependencies

This kind of issue, most of the time, originated in level design having to change small areas of a scene, especially when scripting did not work as intended in the released version or when dependencies were incorrectly connected. Imagine an NPC that should play a voice line when approached by the player. In the released version, someone notices the voice doesn't actually match the scene and files a bug report. When reviewing the connections that were set up, we realize a wrong sound file is connected, but replacing it would remove the dependency on the old sound file. This would lead to the save system no longer being able to resolve all assets from saves made before the change. This is easily fixable by just keeping the connection to the old sound file somewhere in the scene, but it's important that we actually detect when changes like this happen so we can act appropriately. We added an action in the build pipeline to list the dependencies of each scene and warn us in case breaking changes happen.
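The core of such a pipeline check is a set comparison between the dependency list of the released build and that of the patch candidate. The sketch below assumes assets are identified by strings; `DependencyDiff`, `AssetDependencyCheck` and the example IDs are made up, and which kinds of change actually break saves depends on how asset IDs are assigned, which the article does not detail.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed class DependencyDiff
{
    public List<string> Removed = new List<string>();   // old saves may still reference these
    public List<string> Added = new List<string>();     // listed so a human can review them
    public bool HasChanges => Removed.Count > 0 || Added.Count > 0;
}

public static class AssetDependencyCheck
{
    // Compares the asset list of the released scene with the current one.
    public static DependencyDiff Compare(IEnumerable<string> released, IEnumerable<string> current)
    {
        var before = new HashSet<string>(released);
        var after = new HashSet<string>(current);
        return new DependencyDiff
        {
            Removed = before.Where(id => !after.Contains(id))
                            .OrderBy(id => id, StringComparer.Ordinal).ToList(),
            Added = after.Where(id => !before.Contains(id))
                         .OrderBy(id => id, StringComparer.Ordinal).ToList(),
        };
    }
}
```

In the voice-line example, swapping the sound file would show up as one removed and one added entry, prompting someone to keep a reference to the old file in the scene.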

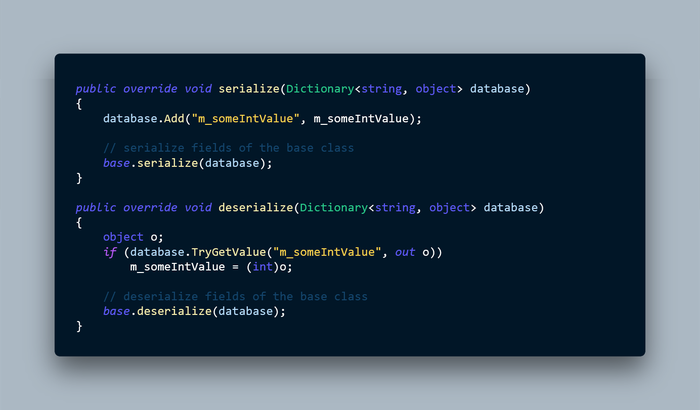

Change in generated coroutine code

When looking at decompilations of our code, we often see compiler-generated objects for our coroutines. Let's look at some code to better understand what's happening here. It's an excerpt of the Dark Excision skill of the playable character Zagan from the DLC Shadow Gambit: Zagan’s Wish, which just released on December 6th.

Image provided by publisher.

We have a coroutine there that plays various animations. As we define local variables inside the coroutine, some object is needed to hold these values. To do this, the compiler generates a new class for us called "<coroAnimation>d__4". This is also the object we need to save for currently running coroutines. The type of this object ("<coroAnimation>d__4") should not change if we patch the game (e.g., to "<coroAnimation>d__5"), as otherwise, we can't correctly restore the coroutine, something that happened quite extensively in our patch for Shadow Tactics. But what exactly does change its type? We realized that the number in the type's name is dependent on the number of fields, properties, or methods that occur in the same class before the coroutine:

0: m_actionExecuteLoop

1: m_actionExecuteEnd

2: m_coroAnimation

3: playPostExecutionAnimation

4: coroAnimation → leading to the class name <coroAnimation>d__4

This also means we're safe to patch changes into our code, unless we add them before any coroutine. We went with adding a special section at the bottom of our source files for every patch we create, where new fields and methods would reside. We additionally added another post-build action where an external application checks all our built assemblies for changes in the names of the generated coroutine objects.
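A check of this kind can be approximated with reflection: list the compiler-generated nested types of each class and compare them with the list recorded for the released build. The sketch below is an assumption about how such a tool could work, not the external application itself; `CoroutineTypeNames` and `DarkExcisionSketch` are hypothetical, and the exact numbering in the generated names depends on the compiler.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public static class CoroutineTypeNames
{
    // Lists nested types whose names look like "<Method>d__N", i.e. the
    // state-machine classes the compiler emits for iterator methods.
    public static List<string> Collect(Type type)
    {
        return type.GetNestedTypes(BindingFlags.NonPublic | BindingFlags.Public)
                   .Select(t => t.Name)
                   .Where(n => n.StartsWith("<", StringComparison.Ordinal) && n.Contains(">d__"))
                   .OrderBy(n => n, StringComparer.Ordinal)
                   .ToList();
    }

    // Names present in the released build but absent from the patched build.
    public static List<string> Missing(IEnumerable<string> released, IEnumerable<string> patched)
    {
        var now = new HashSet<string>(patched);
        return released.Where(n => !now.Contains(n)).ToList();
    }
}

// Hypothetical stand-in, not the shipped skill code.
public class DarkExcisionSketch
{
    private int m_actionExecuteLoop;
    private int m_actionExecuteEnd;

    private IEnumerator CoroAnimation()
    {
        int frames = 3;   // locals are hoisted into the generated class between yields
        while (frames-- > 0) yield return null;
    }
}
```

Running `Collect` over every type in the released assemblies gives a baseline; any entry reported by `Missing` for a patch build means a running coroutine from an older save could no longer be restored.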

One would think it should not be too hard to abide by the rules during the patching phase, but the truth is that errors happen quite easily in daily life, and the safety checks in the build pipeline saved us from releasing a defective patch more than once.

Takeaways

I hope you found diving into our save system interesting and can take some learnings with you. For me, there are five points I want to emphasize:

Respect programmers' time and remove possible friction; for us, that meant:

Default to save everything without need for additional code.

Keep the special rules to a minimum (e.g., by allowing save-load of coroutines).

Optimization does not have to be built in from the start: If in the end you know all your requirements, optimizations of specific bottlenecks can often be easier to implement.

Be conscious that errors just happen in daily life: You should always have pipelines that prevent them from slipping through.

I really loved working on the save system as well as on stealth-strategy games altogether and hope this genre will continue to flourish even without the pioneering projects from Mimimi Games.
